On Difficulties of Cross-Lingual Transfer with Order Differences: A Case Study on Dependency Parsing

Published in NAACL, 2019

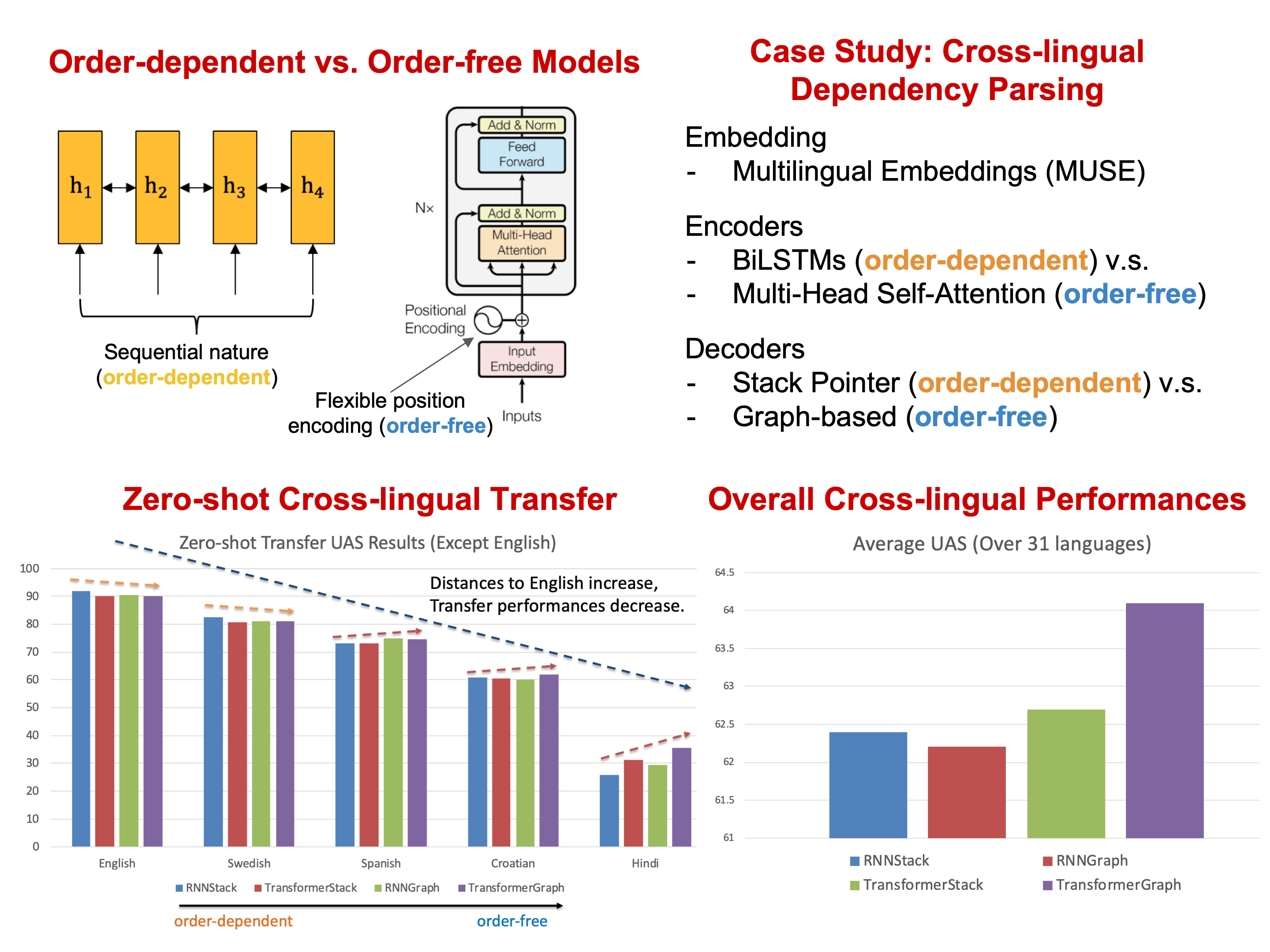

Different languages might have different word orders. In this paper, we investigate crosslingual transfer and posit that an orderagnostic model will perform better when transferring to distant foreign languages. To test our hypothesis, we train dependency parsers on an English corpus and evaluate their transfer performance on 30 other languages. Specifically, we compare encoders and decoders based on Recurrent Neural Networks (RNNs) and modified self-attentive architectures. The former relies on sequential information while the latter is more flexible at modeling word order. Rigorous experiments and detailed analysis shows that RNN-based architectures transfer well to languages that are close to English, while self-attentive models have better overall cross-lingual transferability and perform especially well on distant languages.